Training Feed Forward Neural Network(FFNN) on GPU — Beginners Guide | by Hargurjeet | MLearning.ai | Medium

Make Every feature Binary: A 135B parameter sparse neural network for massively improved search relevance - Microsoft Research

PyTorch-Direct: Introducing Deep Learning Framework with GPU-Centric Data Access for Faster Large GNN Training | NVIDIA On-Demand

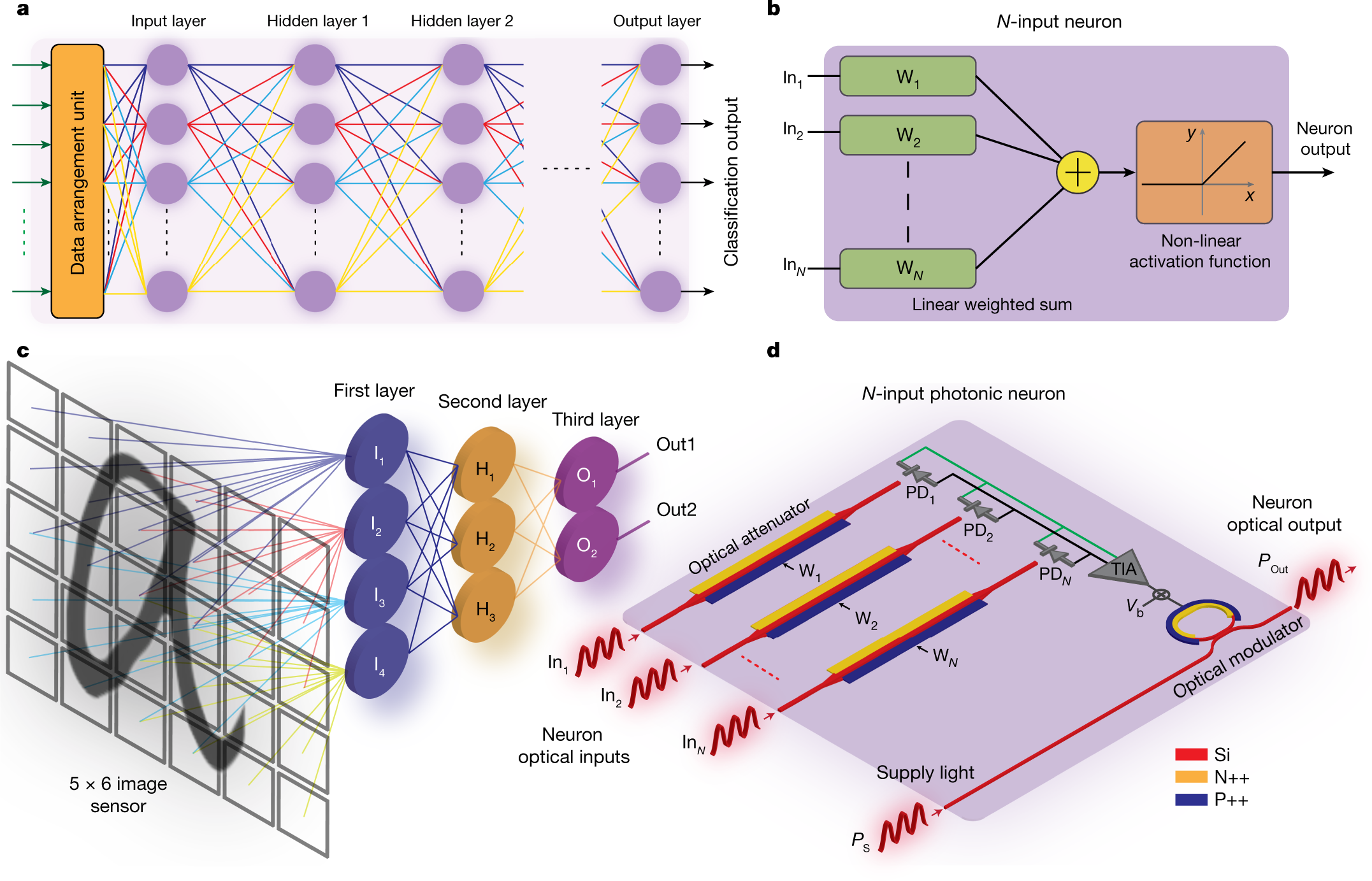

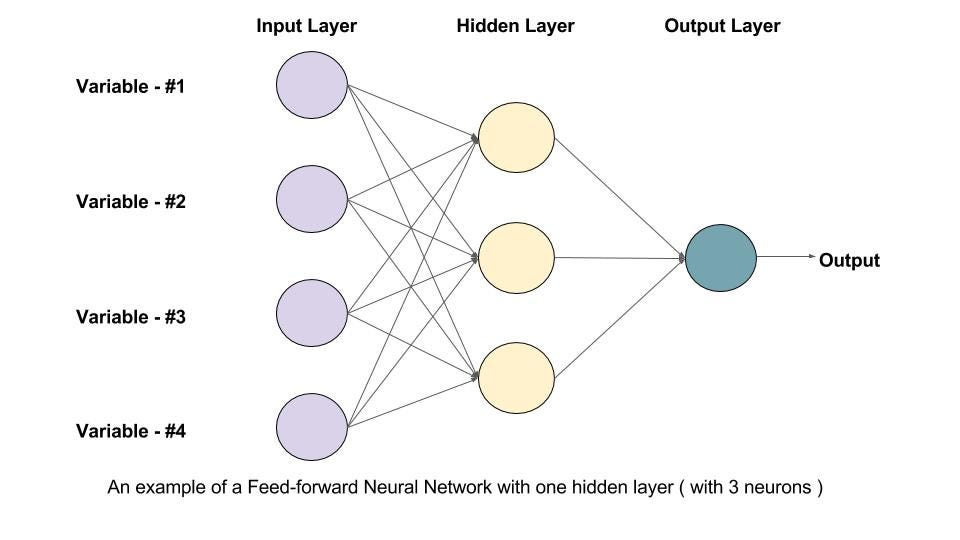

Multi-Layer Perceptron (MLP) is a fully connected hierarchical neural...